
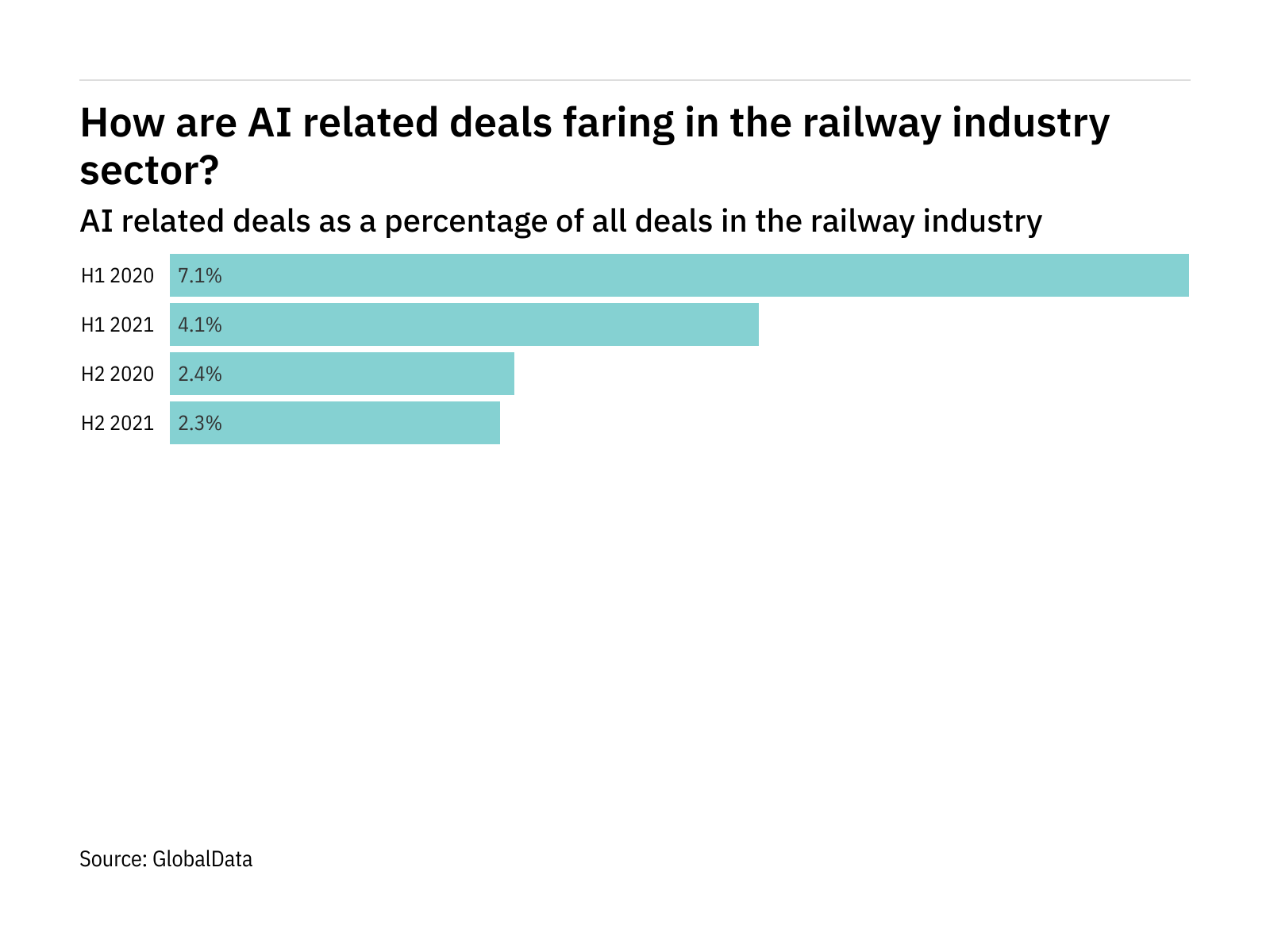

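Observed Speedup: the observed speedup of a code which has been parallelized, defined as the wall-clock time of serial execution divided by the wall-clock time of parallel execution.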
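As a rough illustration of observed speedup, the sketch below divides a serial wall-clock time by a parallel wall-clock time. The function names `observed_speedup` and `wall_clock_seconds` and the example timings are hypothetical, chosen only for this sketch; they do not come from the tutorial.

```python
import time


def wall_clock_seconds(fn):
    """Run fn() once and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def observed_speedup(serial_seconds, parallel_seconds):
    """Serial wall-clock time divided by parallel wall-clock time."""
    if serial_seconds <= 0 or parallel_seconds <= 0:
        raise ValueError("wall-clock times must be positive")
    return serial_seconds / parallel_seconds


# Hypothetical timings: a run that takes 120 s serially and 40 s in parallel.
print(observed_speedup(120.0, 40.0))  # 3.0
```

In practice both timings would be measured on the same problem size and the same machine, for example with a helper like `wall_clock_seconds`, so that the ratio reflects only the effect of parallelization.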

- Read/write, random access memory is used to store both program instructions and data.
- Program instructions are coded data which tell the computer to do something.
- Data is simply information to be used by the program.
- Control unit fetches instructions/data from memory, decodes the instructions and then sequentially coordinates operations to accomplish the programmed task.
- Arithmetic Unit performs basic arithmetic operations.
- Input/Output is the interface to the human operator.

Parallel computers still follow this basic design, just multiplied in units. The basic, fundamental architecture remains the same.

Flynn's Classical Taxonomy

- There are a number of different ways to classify parallel computers. Examples are available in the references.
- One of the more widely used classifications, in use since 1966, is called Flynn's Taxonomy.
- Flynn's taxonomy distinguishes multi-processor computer architectures according to how they can be classified along the two independent dimensions of Instruction Stream and Data Stream. Each of these dimensions can have only one of two possible states: Single or Multiple.
- Combining the two dimensions gives the 4 possible classifications according to Flynn: SISD (Single Instruction, Single Data), SIMD (Single Instruction, Multiple Data), MISD (Multiple Instruction, Single Data) and MIMD (Multiple Instruction, Multiple Data).

Commonly used terms:

- Task: A logically discrete section of computational work. A task is typically a program or program-like set of instructions that is executed by a processor. A parallel program consists of multiple tasks running on multiple processors.
- Pipelining: Breaking a task into steps performed by different processor units, with inputs streaming through, much like an assembly line; a type of parallel computing.
- Shared Memory: Describes a computer architecture where all processors have direct access to common physical memory. In a programming sense, it describes a model where parallel tasks all have the same "picture" of memory and can directly address and access the same logical memory locations regardless of where the physical memory actually exists.
- Symmetric Multi-Processor (SMP): Shared memory hardware architecture where multiple processors share a single address space and have equal access to all resources - memory, disk, etc.
- Distributed Memory: In hardware, refers to network based memory access for physical memory that is not common. As a programming model, tasks can only logically "see" local machine memory and must use communications to access memory on other machines where other tasks are executing.
- Communications: Parallel tasks typically need to exchange data. There are several ways this can be accomplished, such as through a shared memory bus or over a network.
- Synchronization: The coordination of parallel tasks in real time, very often associated with communications. Synchronization usually involves waiting by at least one task, and can therefore cause a parallel application's wall clock execution time to increase.
- Granularity: In parallel computing, granularity is a quantitative or qualitative measure of the ratio of computation to communication.
  - Coarse: relatively large amounts of computational work are done between communication events.
  - Fine: relatively small amounts of computational work are done between communication events.
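To make the fine versus coarse granularity distinction from this section more concrete, here is a minimal sketch that splits the same work into many small tasks or a few large ones. The helper names `split` and `parallel_sum_of_squares`, the chunk sizes, and the choice to count each returned partial result as one "communication event" are assumptions made for illustration only. Python threads are used just to show the structure; they are not expected to give a real speedup for this CPU-bound loop.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, chunk_size):
    """Split items into consecutive chunks of at most chunk_size elements."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def sum_of_squares(chunk):
    """The computational work done by one task."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, chunk_size, workers=4):
    """Return (total, number_of_tasks).

    Each chunk is one task; each partial sum handed back to the caller
    is treated here as one communication event.
    """
    chunks = split(items, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials), len(chunks)


data = list(range(1000))

# Fine granularity: 10 items of work per communication event.
fine_total, fine_events = parallel_sum_of_squares(data, chunk_size=10)

# Coarse granularity: 250 items of work per communication event.
coarse_total, coarse_events = parallel_sum_of_squares(data, chunk_size=250)

print(fine_total == coarse_total)  # True
print(fine_events, coarse_events)  # 100 4
```

Both runs compute the same result; what changes is how much computation happens between communication events, which is the ratio that granularity describes.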


- Potential Benefits, Limits and Costs of Parallel Programming
- Distributed Memory / Message Passing Model

This is the first tutorial in the "Livermore Computing Getting Started" workshop. It is intended to provide only a brief overview of the extensive and broad topic of Parallel Computing, as a lead-in for the tutorials that follow it. As such, it covers just the very basics of parallel computing, and is intended for someone who is just becoming acquainted with the subject and who is planning to attend one or more of the other tutorials in this workshop. It is not intended to cover Parallel Programming in depth, as this would require significantly more time.

The tutorial begins with a discussion on parallel computing - what it is and how it's used, followed by a discussion on concepts and terminology associated with parallel computing. The topics of parallel memory architectures and programming models are then explored. These topics are followed by a series of practical discussions on a number of the complex issues related to designing and running parallel programs. The tutorial concludes with examples of how to parallelize several simple problems. References are included for further self-study.

Overview

What Is Parallel Computing?

Serial Computing

Traditionally, software has been written for serial computation.
